Trends/January 26, 2023

AI Product Photography is Already Better Than You Think

Alfred

Co-founder

Businesses are already using AI tools to generate images to build their brand and sell their products. Don't get left behind.

If you haven’t been paying attention to the AI space, you might think AI image generation is still for the AI geeks creating fancy art.

I have bad news for you. You will be left behind soon.

Businesses are already using AI tools to generate images to build their brand and sell their products. For example, GothRider®’s Belle™ Coffee ad campaign is entirely driven by AI. Founder Phil Kyprianou generated the image with Midjourney and added his product and copy with another design tool.

And this example is only scratching the surface of what AI tools can do for product photography.

Maybe that made you feel nervous. Or excited.

Either way, let me catch you up on the state of AI product photography and what we can expect in the near future so that you can leverage it for your business. More than 25,000 creatives have used our AI product photography tool, Pebblely, since we launched three weeks ago, and we are having dozens of conversations about AI product photography with our business customers every day.

AI tools can generate much better product images than you think

AI tools can already generate high-quality product images that are almost indistinguishable from professional product photoshoots, and in some cases even better. The results are a long way from simply slapping a product onto a background image.

While generalized AI image generation tools, such as Midjourney, tend to change the shape of a product or alter the text on the product, specialized AI product image tools, like Pebblely, can preserve the details of the product.

Specialized AI product image tools will add appropriate shadows and reflections so that the product blends with the background.

They can generate settings that are prohibitively expensive or impossible to create in real life.

They can even create images with humans and animals in the background at no extra cost.

And all of this requires only a single product image and a text description.

There are also AI tools like Scale Forge that let you upload a few photos of your product and generate images of your product from different angles in different settings. And tools like ClipDrop let you change the lighting and shadows of images using AI.

Admittedly, AI-generated images are not perfect. Neither are traditional photoshoot photos, Photoshopped images, or 3D renders. But like them, AI product photos have reached a point where they are good enough for most consumers.

AI product photography is a better option for many businesses

If you had unlimited money and time, you would hire professional photographers, go to different locations to shoot, and have designers edit the shots. You might even have art directors to decide the creative direction.

But most businesses make trade-offs between speed, quality, and cost.

Let's go through these factors:

- Time (speed)

- Control and creativity (quality)

- Scale and cost (cost)

1. Time

AI tools take only a few clicks and a few seconds to generate a new image. And this will become even faster with more engineering optimization and increased processing power.

In comparison, a photoshoot often takes an entire day, and you have to schedule it a few days in advance and prepare for it. Even if you are great with Photoshop or 3D rendering, producing an image will still take you longer. If you are outsourcing it to a freelancer or agency, don’t forget to consider the back-and-forth emails and calls.

Granted, it takes time to learn how to use AI tools but the learning curve for photography, Photoshop, and 3D rendering is much steeper.

2. Control

You might argue you have more control with a photoshoot or using Photoshop or 3D rendering. You are right to a certain extent.

AI tools are not advanced enough to understand exactly what you want. They often require you to type a string of seemingly random keywords in a format that the AI understands (e.g. “on a wooden table, lemons, oranges, photorealistic, unreal engine, ultrahd, 4k, professional photography” instead of “a high-quality image of my product on a wooden table with lemons and oranges”). Even then, the AI might not generate the exact image you want.
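To illustrate the keyword format described above, here is a minimal sketch that assembles such a prompt from a setting, some props, and a few style keywords. The function `build_prompt` and its parameter names are made up for this illustration; they are not part of any tool's API.

```python
def build_prompt(setting, props=(), style_keywords=()):
    """Join a setting, props, and style keywords into one comma-separated prompt."""
    parts = [setting, *props, *style_keywords]
    # Drop empty entries and stray whitespace so the prompt stays clean.
    return ", ".join(p.strip() for p in parts if p and p.strip())


# Hypothetical inputs, chosen to reproduce the example prompt quoted above.
prompt = build_prompt(
    "on a wooden table",
    props=["lemons", "oranges"],
    style_keywords=[
        "photorealistic",
        "unreal engine",
        "ultrahd",
        "4k",
        "professional photography",
    ],
)
print(prompt)
```

Keeping the setting, props, and style keywords separate makes it easy to swap one part, say the setting, while leaving the rest of the prompt untouched.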

But that is changing.

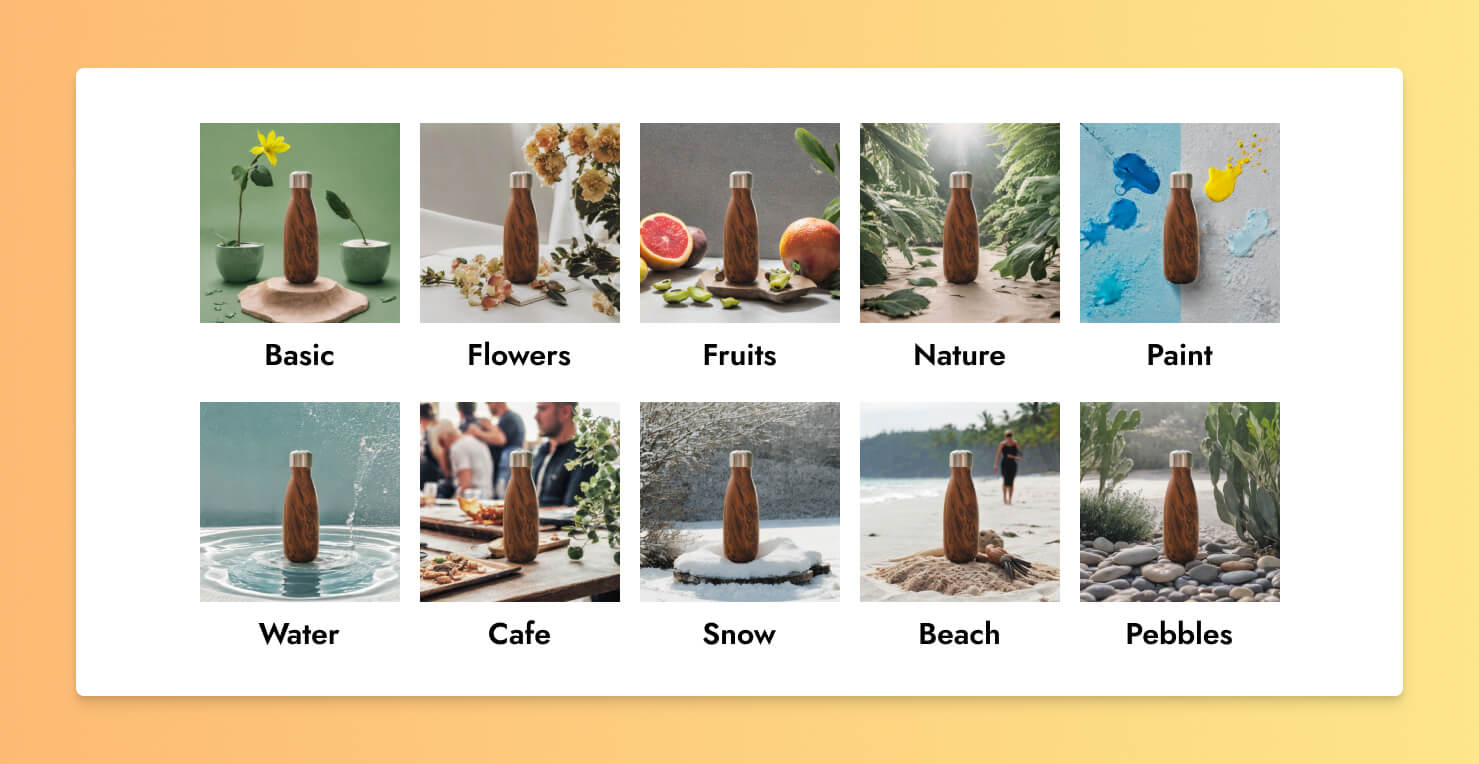

One, AI tools are introducing friendlier user interfaces, such as forms and dropdowns, to help even non-tech-savvy users instruct the AI. At Pebblely, we made it even simpler. Users can simply pick from a list of themes, such as flowers, cafe, or beach, to get images of their product in those settings.

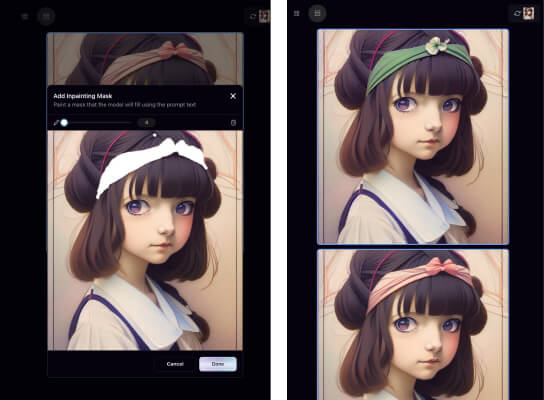

Two, some AI tools, such as Playground AI, allow you to erase parts of a generated image and re-generate something new there until you are satisfied. You can remove a flower, add a shadow, change a table into a chair, and more.

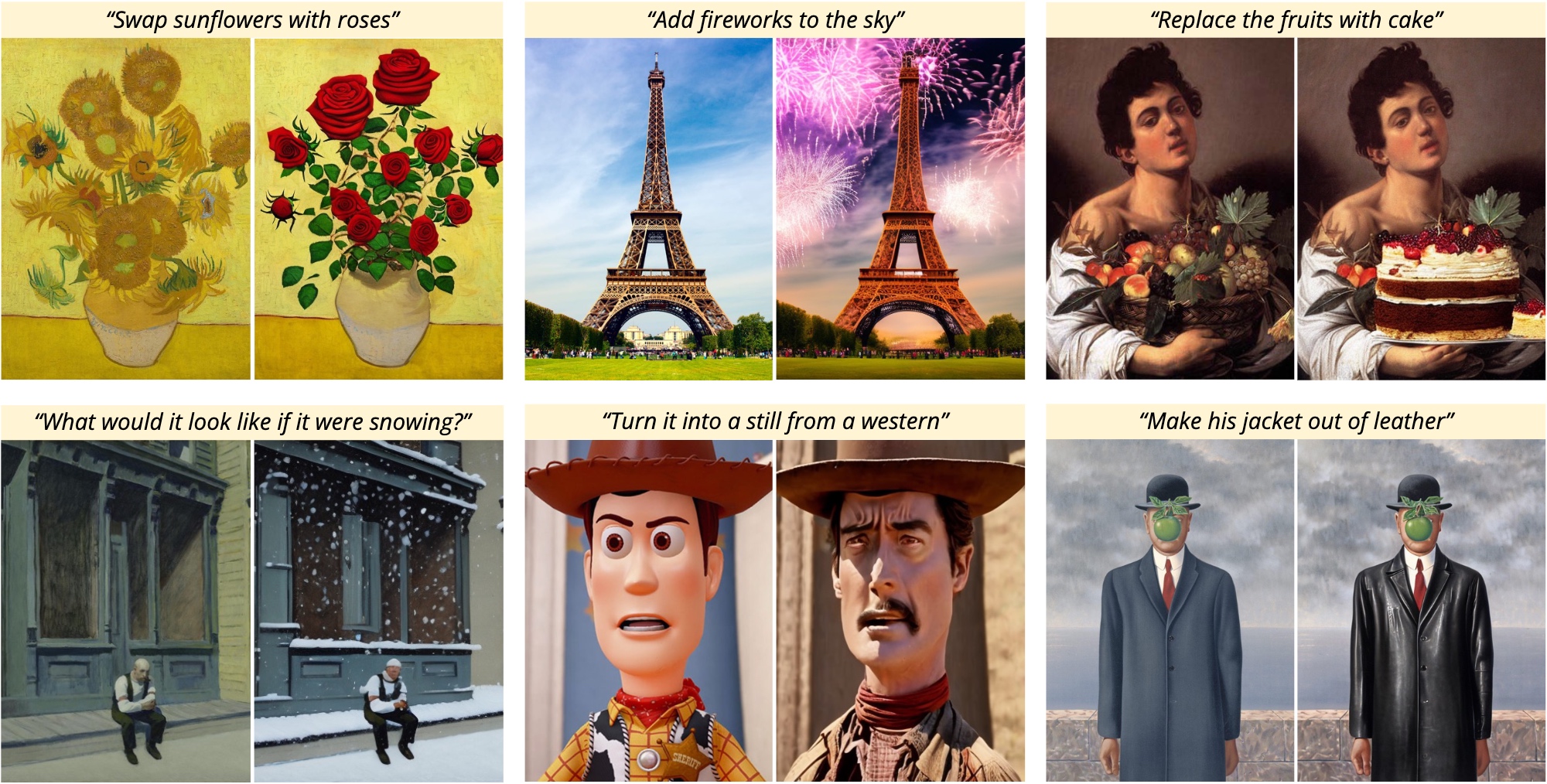

Three, just like you enter a text description to generate an image, you can now enter a text description into some AI tools to edit an image.

We expect these features to become common among AI product photography tools eventually, just as most photo-editing apps now share a similar set of features.

3. Creativity

This might be a weird dimension to consider but let me explain. When you have a photoshoot, you might have certain ideas for the photos. Or you might depend on the photographer or a creative director to come up with ideas for the shoot. AI tools can also help you with that.

For example, when I was recreating the product images of top perfume brands, I wanted a futuristic and sci-fi-like image for Paco Rabanne Phantom. But I didn’t know how to visualize that. So in Pebblely, I entered the description, “standing in a dark room, beside a lemon slice, vanilla stalk, lavender stalk, at night, cyberpunk, futuristic, sci-fi, neon lights”. Pebblely generated fascinating ideas that I hadn’t thought of, such as the futuristic portal, spaceship, and moon.

4. Scale

Because generating new images is as simple as clicking a button, AI tools allow you to get new images at a scale that is hard to achieve with traditional means.

- If you want variations of a particular image or style, you only have to click “Generate” again.

- If you want a different setting, you only have to change your description. Yes, you might need to experiment with different descriptions to get what you want. But compare that to a traditional photoshoot where you would have to move to an entirely different location.

- Because the images are programmatically generated, you can technically generate hundreds of images for hundreds of products, automatically and all at once. Well, you could also hire hundreds of photographers, but that would be exorbitant.
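As a rough sketch of what "programmatically generated" can look like, the snippet below pairs every product image with every setting description to build a batch of jobs. The file names and descriptions are invented for the example, and `generate_image` is only a placeholder standing in for whatever image-generation call a given tool offers; it is not a real API.

```python
from itertools import product


def plan_jobs(product_images, descriptions):
    """Pair every product image with every setting description."""
    return [
        {"product_image": image, "description": description}
        for image, description in product(product_images, descriptions)
    ]


def generate_image(job):
    """Placeholder for a real image-generation call (hypothetical, not a real API)."""
    return f"generated: {job['product_image']} | {job['description']}"


# Hypothetical product files and setting descriptions.
product_images = ["serum.png", "perfume.png", "mug.png"]
descriptions = ["on a wooden table, lemons", "on a beach, at sunset"]

jobs = plan_jobs(product_images, descriptions)
results = [generate_image(job) for job in jobs]
print(len(results))  # 3 products x 2 settings = 6 jobs
```

The number of jobs grows as products times settings, so a few hundred products and a handful of settings already means thousands of images from a single script.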

5. Cost

Finally, we come to cost. Just as an example, Pebblely is $19 per month, and you can generate unlimited images. Photoshoots can cost thousands of dollars, excluding the time cost of preparing for them and actually shooting. The costs of Photoshop and 3D renderings are somewhere in the middle.

AI-generated images are already cheaper than traditional ones by orders of magnitude, and they will only become cheaper as processing power grows. Moore’s Law, the observation that the number of transistors on a chip doubles roughly every two years, has broadly held since the 1970s. If it continues to hold, the computing power you get for the same money will keep doubling on a similar schedule.
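To put a rough number on the Moore's Law point, here is a back-of-the-envelope sketch. It assumes, as a simplification, that the compute cost of generating an image halves every time processing power doubles, and it uses a relative cost of 1.0 for today rather than any real price.

```python
def projected_cost(cost_today, years, doubling_period_years=2.0):
    """Cost after `years` if it halves once per doubling period (idealized assumption)."""
    return cost_today / (2 ** (years / doubling_period_years))


# Relative cost, with today's cost normalized to 1.0.
for years in (0, 2, 4, 10):
    print(years, projected_cost(1.0, years))
```

Under this idealized assumption, ten years means five doublings, so the same image would cost 1/32 of what it costs today.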

But AI product photography has its challenges

Surely AI product photography has some limitations? Definitely! But most of them will be solved in the coming years.

Hallucinations

A small, annoying problem with many AI tools, including Pebblely, is that the AI sometimes adds unwanted parts to a product. For example, it might add a cap to a skincare serum, a wheel to a pram, or a gold chain to a ring. If you are curious, these unwanted parts are known as “artifacts”, and this problem is called “hallucination”, as in the AI thinks the additional part exists when it doesn’t.

This is a solvable problem, though. By letting the AI “see” (technically, train on) more images of those products, it will be less likely to hallucinate because it “knows” how the products should look. As we work with actual businesses, we have been improving our AI model every week to understand objects of different shapes better.

Unrealistic humans and fingers

Even the best-funded and most advanced AI companies are struggling to generate images of humans properly. We see other humans regularly and instinctively pick up on any subtle oddities in images, even if we cannot explain them, like eyes or ears that just don’t feel right. And as silly as this might sound, AI models are especially terrible with fingers. AI-generated humans tend to have more or fewer than five fingers on each hand.

But the industry is making good progress on this too. Lexica Art, another image-generation AI tool, can generate fairly realistic images of humans. And Protogen, an open-source AI model, can produce humans with near-perfect fingers.

One trick for now, though, is to create close-up shots with blurry humans in the background so that you cannot see the issues with the generated humans.

Weird fashion photos

Finally, the combination of the two problems above makes AI fashion photos hard. AI tools cannot generate realistic humans, and they don’t understand clothing sizes and fit well yet. AI-generated images for fashion often look like badly Photoshopped images.

That being said, we are seeing bigger companies, such as Vue.ai, trying to tackle this problem already. So it is technically possible but too expensive for most businesses right now. We have also been exploring different solutions and have gotten reasonable results from transferring a piece of clothing from one model to another model.

Beyond photography

Despite the challenges with AI product photography, we are confident many of the issues above will be solved in the next 1-2 years.

Just two years ago, OpenAI announced DALL-E, which could create images from open-ended text descriptions with a flexibility not seen before. A year later, they followed up with DALL-E 2, which can generate more realistic and accurate images. Then, last August and November, Stability AI released the open-source Stable Diffusion V1 and V2, which helped fuel an explosion of AI image tools such as Pebblely. With more funding and resources being poured into AI by big companies, like OpenAI and Microsoft, and by venture capitalists, development will likely get even faster.

But getting high-quality product images is a means to an end. In the end, businesses need to sell products, and that takes more than just images. Unlike traditional methods, AI product photography tools can extend further into areas such as:

- Generating images with text, such as product infographics

- Turning images into videos, which are more engaging

- Plugging into ad platforms or businesses’ websites and automatically updating images based on clickthrough and conversion rates

All these are technically possible already. As the adoption of AI product photography grows, we will see more and more tools solving not just AI product photography problems but also these business problems. Keep an eye out!
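As one illustration of updating images based on clickthrough rates, here is a minimal sketch of a selection rule: among image variants that have been shown often enough, pick the one with the highest clickthrough rate. The stats, file names, and the `min_impressions` threshold are assumptions for this example, not data from any real ad platform or how any particular tool does it.

```python
def click_through_rate(clicks, impressions):
    """Clicks divided by impressions, or 0.0 when there are no impressions."""
    return clicks / impressions if impressions else 0.0


def pick_best_image(stats, min_impressions=100):
    """Return the image with the highest CTR among those shown often enough."""
    eligible = {
        name: click_through_rate(s["clicks"], s["impressions"])
        for name, s in stats.items()
        if s["impressions"] >= min_impressions
    }
    if not eligible:
        return None  # Not enough data to choose yet.
    return max(eligible, key=eligible.get)


# Hypothetical performance numbers for three generated variants.
stats = {
    "beach.png": {"clicks": 30, "impressions": 1000},  # 3.0% CTR
    "cafe.png": {"clicks": 45, "impressions": 1000},  # 4.5% CTR
    "flowers.png": {"clicks": 5, "impressions": 20},  # 25% CTR, but too few impressions
}
print(pick_best_image(stats))
```

The impression threshold keeps a variant with a handful of lucky clicks from winning before there is enough data to trust its rate.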
